Forge Architecture

The single biggest cost of any piece of code is usually maintenance. Porting to another platform is a close second. Commonly, in ported code, you’ll see a lot of things that look like

    #ifdef WIN
    someWinAPICall()
    #elif PSX
    someProprietaryCall()
    #endif

in the simplest case, anyway. If something’s more complex you might have whole functions replaced, or, if it’s sufficiently complex, an interface with platform-specific objects behind it. At worst, a codebase ends up as a melange of code that may or may not apply to a given system, and code flow becomes both a mess and a mystery.

To me the ideal is that the code for a platform should be readable as is. Code specific to that platform should be compiled in from its own files. Code for other platforms can be kept out of the build simply by not supplying it to the compiler. Other than platform-specific optimisations (which always end up polluting code down the line), the aim is to keep platform-specific code as confined as possible.
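As a rough sketch of that file-per-platform arrangement, the block below shows several files in one listing, separated by comments. The file names, the `platform` namespace and both functions are made up for illustration; only the shape matters. In a real build, `platform_win.cpp` and `platform_psx.cpp` would be separate files, and the build script would list exactly one of them.

```cpp
#include <string>

// ---- platform.h: shared declarations, the only part other code sees ----
namespace platform {
std::string name();                             // short platform tag
std::string savePath(const std::string& file);  // where save files live
}

// ---- platform_win.cpp: handed to the compiler for Windows builds only ----
namespace platform {
std::string name() { return "win"; }
std::string savePath(const std::string& file) { return "saves/" + file; }
}

// ---- platform_psx.cpp: listed in the PSX build instead, never both ----
// namespace platform {
// std::string name() { return "psx"; }
// std::string savePath(const std::string& file) { return "memcard:" + file; }
// }

// ---- game.cpp: platform-neutral, contains no #ifdef at all ----
std::string describeSave(const std::string& file) {
    return platform::name() + ":" + platform::savePath(file);
}
```

The game code reads straight through for whichever platform is being built, and the choice of platform lives in the build configuration rather than in the source.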

Interfaces, not implementations

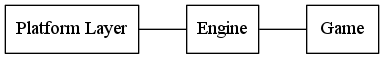

Done perfectly, isolated correctly, the contents of the platform-specific code don’t matter. In fact, when designing the architecture of an engine, only the interfaces of components matter. So while many diagrams might look like this

really it’s the lines that matter. In this ideal state, you can replace that platform-specific code with different code, specific to a different platform. Or perhaps you’ve isolated your renderer, and you can hence replace it with a different renderer. Or your input stack, or your network stack.

Which thing you want to be able to replace fundamentally affects that interface. If you’re replacing a renderer, you probably want an interface in the traditional sense - a fully pure-virtual base class, in C++ terms. If you don’t want runtime swappability, then there are static equivalents, where you might have a header defining a class, and multiple implementations, compiled in as required. At the far end you might want it to be so dynamic that the user can swap one of the runtime files to change the behaviour. This is where interface design really matters.
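Here is a minimal sketch of the runtime-swappable case, assuming a hypothetical `Renderer` interface with a single `drawFrame` method that returns a label so the swap is visible. `SoftwareRenderer`, `HardwareRenderer` and `Engine` are illustrative names, not part of any real engine.

```cpp
#include <memory>
#include <string>
#include <utility>

// The interface in the traditional sense: a pure-virtual base class.
class Renderer {
public:
    virtual ~Renderer() = default;
    virtual std::string drawFrame() = 0;
};

class SoftwareRenderer : public Renderer {
public:
    std::string drawFrame() override { return "software"; }
};

class HardwareRenderer : public Renderer {
public:
    std::string drawFrame() override { return "hardware"; }
};

// The engine only ever holds the interface, so the implementation behind
// it can be replaced while the program is running.
class Engine {
public:
    explicit Engine(std::unique_ptr<Renderer> renderer)
        : renderer_(std::move(renderer)) {}

    void setRenderer(std::unique_ptr<Renderer> renderer) {
        renderer_ = std::move(renderer);
    }

    std::string tick() { return renderer_->drawFrame(); }

private:
    std::unique_ptr<Renderer> renderer_;
};
```

Constructing an `Engine` with a `SoftwareRenderer` and later calling `setRenderer` with a `HardwareRenderer` changes what `tick()` does without touching `Engine` itself. The static equivalent drops the virtual calls and picks the implementation at build time instead.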

Reusability and Rewritability

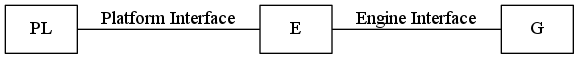

If I used the above diagram as the highest-level view of an engine design (and I intend to) the things I’d need to be the most careful about are the Platform Interface and Engine Interface, both in the traditional sense of what data crosses them and in what format, and in how those bridges are built. If G lives in a dynamic library, then given the right design it should be possible to edit and rebuild the code while the game is running.

This presents some problems when it comes to memory allocation. On Windows, every DLL can potentially have its own C runtime, and its own memory allocator. Any memory allocated must be deallocated by the same allocator instance, so if a DLL is unloaded, and was the only user of its allocator, it must deallocate all its memory first, or nothing else will be able to do so correctly.

Obviously this is not ideal if you’re trying to reload the main portion of a game’s code. However, if the DLLs and their host program are built against the same dynamically linked runtime, the allocator should be shared, so at least on Windows this problem can just be ignored. But if this isn’t the case on other platforms, the way to fix it is to have the platform interface include access to the appropriate allocator.
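One way that could look, as a sketch only: the platform layer owns the allocator and hands the game module a small table of function pointers. `PlatformMemory`, `gameInit`, `gameShutdown` and the live-block counter are all hypothetical names invented for this example, and `malloc`/`free` stand in for whatever allocator the platform actually uses.

```cpp
#include <cstddef>
#include <cstdlib>
#include <new>

// Hypothetical table of memory functions that the platform layer owns and
// passes across the Platform Interface to the reloadable game module.
struct PlatformMemory {
    void* (*allocate)(std::size_t bytes);
    void (*release)(void* block);
};

// ---- platform layer: the one place that touches the real allocator ----
std::size_t g_liveBlocks = 0;  // simple leak counter for this sketch

void* platformAllocate(std::size_t bytes) {
    void* block = std::malloc(bytes);
    if (block) ++g_liveBlocks;
    return block;
}

void platformRelease(void* block) {
    if (!block) return;
    --g_liveBlocks;
    std::free(block);
}

PlatformMemory makePlatformMemory() {
    return PlatformMemory{platformAllocate, platformRelease};
}

// ---- game module: never allocates from a heap of its own ----
struct GameState {
    int score;
};

GameState* gameInit(const PlatformMemory& memory) {
    void* raw = memory.allocate(sizeof(GameState));
    if (!raw) return nullptr;
    return new (raw) GameState{0};  // construct in platform-owned memory
}

void gameShutdown(const PlatformMemory& memory, GameState* state) {
    if (!state) return;
    state->~GameState();
    memory.release(state);
}
```

Because every block comes from and returns to the platform's allocator, unloading and reloading the game module never strands memory in a heap that has gone away.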

Platform Interface

So, what goes in here? What does the platform need to provide at its most basic? What can’t be inferred or created in a higher layer? Memory management is one potential answer, and definitely needed if we want to be as safe as possible. All the other parts of the answer I can currently think of are forms of input and output. We need a video output buffer: the screen, or a window pane. We need an audio output buffer, likely the simplest part. And we need information about how the user is trying to send commands, usually via the mouse, keyboard, and gamepads.

Additionally, we’ll need some control over these - we might want to request a particular screen resolution or mode, or there may be some output in the input devices we want to use (e.g., vibration). We might also want to request particular behaviour of the audio system - e.g., sample rate. But creating an interface which should cover all eventualities for all platforms shouldn’t be too hard given the above.
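To make that list concrete, here is one possible shape for the data crossing the Platform Interface. Every type and function name below is an assumption made for illustration, as are the default resolution and sample rate; a real interface would need more fields and would have to cope with requests the platform can't honour.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// What the engine asks for; a real platform might only approximate it.
struct PlatformRequest {
    int width = 640;
    int height = 360;
    int sampleRate = 48000;
};

struct VideoBuffer {
    int width;
    int height;
    std::vector<std::uint32_t> pixels;  // one packed colour per pixel
};

struct AudioBuffer {
    int sampleRate;
    std::vector<std::int16_t> samples;  // mono, for brevity
};

struct InputState {
    bool left = false;
    bool right = false;
    bool action = false;
};

// Output that travels back to an input device, e.g. gamepad vibration.
struct DeviceOutput {
    float vibration = 0.0f;  // 0 = off, 1 = full strength
};

// ---- platform side: creates the buffers the game will fill ----
VideoBuffer makeVideoBuffer(const PlatformRequest& request) {
    const std::size_t count =
        static_cast<std::size_t>(request.width) * request.height;
    return VideoBuffer{request.width, request.height,
                       std::vector<std::uint32_t>(count)};
}

AudioBuffer makeAudioBuffer(const PlatformRequest& request, int framesPerSecond) {
    const std::size_t count =
        static_cast<std::size_t>(request.sampleRate / framesPerSecond);
    return AudioBuffer{request.sampleRate, std::vector<std::int16_t>(count)};
}

// ---- game side: everything it needs arrives through its arguments ----
DeviceOutput gameFrame(const InputState& input, VideoBuffer& video,
                       AudioBuffer& audio) {
    const std::uint32_t colour = input.action ? 0xFFFF0000u : 0xFF000000u;
    for (std::uint32_t& pixel : video.pixels) pixel = colour;
    for (std::int16_t& sample : audio.samples) sample = 0;  // silence

    DeviceOutput output;
    output.vibration = input.action ? 1.0f : 0.0f;
    return output;
}
```

The game never touches a window, a sound device or a controller directly. It reads an `InputState`, writes into two buffers, and returns whatever it wants sent back to the devices.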

Oh but it is

Of course, this all depends on what’s available on what platforms, and what their APIs make available to you. On Windows the above is all certainly possible, and with a bit of work you could use hardware-accelerated audio and video, all controlled from the engine layer. However… on modern console platforms you rarely get such low-level access, and using this architecture, you might find that some methods you’ve put in the Engine layer (e.g., playAudioSample) have to make direct API calls.

This just leads to the previous principles needing to be implemented everywhere. Any coherent system might need to be swapped out on a particular platform to deal with quirks of that platform, and your architecture, particularly its interfaces, needs to be designed to allow that.